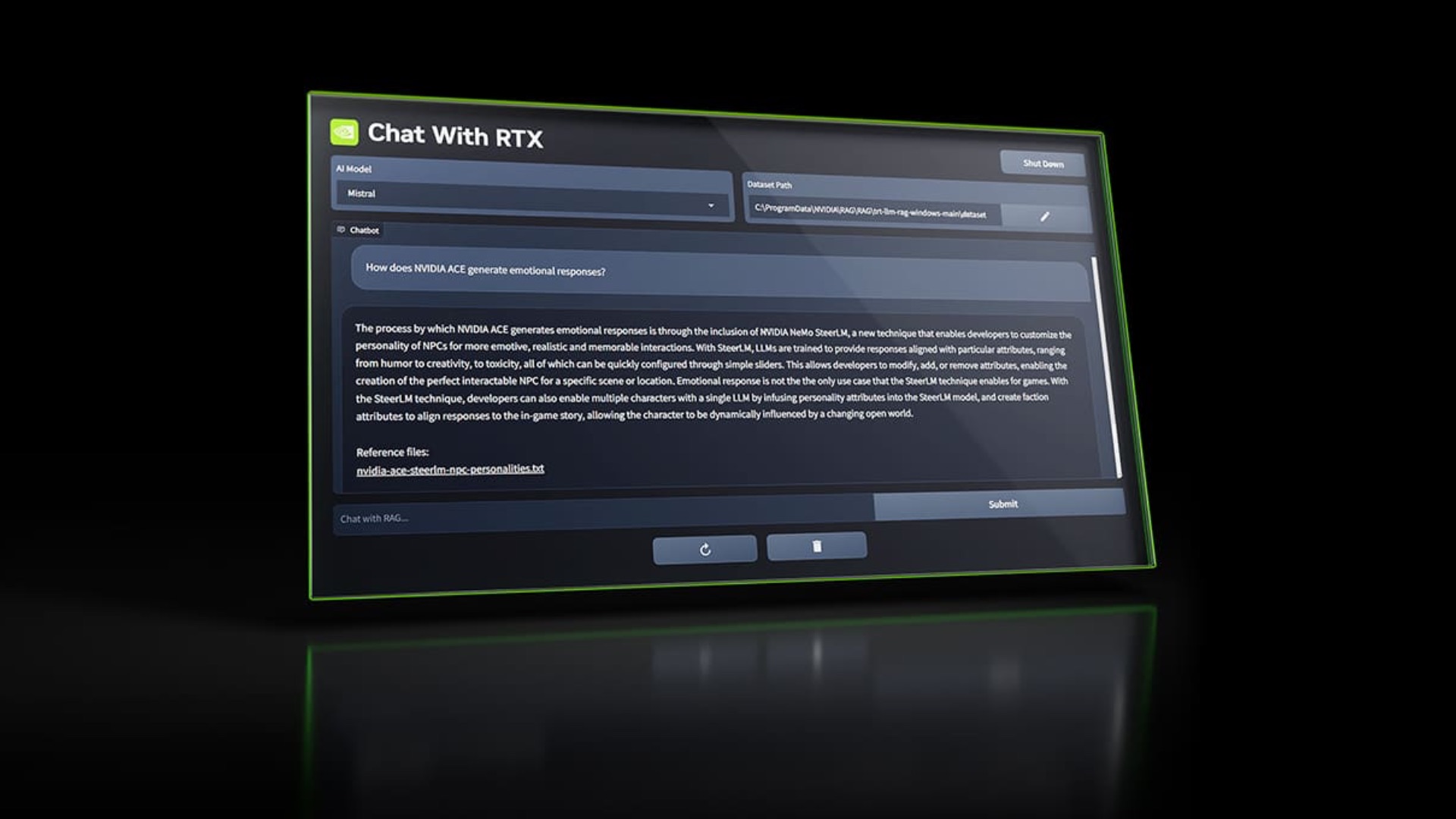

Nvidia has released Chat with RTX, an application for Windows. It runs two large language models (LLMs), Llama 2 and Mistral, locally on RTX 30 series and RTX 40 series graphics cards and answers your queries about content you connect to the app, such as documents, photos, and videos. It can also load the transcript of a YouTube video and use it to answer your questions about that video.

In short, Chat with RTX lets you run LLMs locally on your PC without an internet connection, which, according to Nvidia, should deliver “fast and secure results.” The company calls it a “demo” app, which we take to mean it is essentially a beta version. The app is free; it is a 35GB download available from Nvidia’s official website.

Chat with RTX makes LLMs more accessible and opens up many new possibilities. Nvidia could add more features over time and make the app useful in ways we can't yet foresee. It has been only a little over a year since OpenAI launched ChatGPT, and we are already seeing many innovations in generative AI (GenAI). The future of GenAI is going to be very interesting.